Automate tests

Many behavioural tests are straightforward, with ANY-maze simply tracking the animal’s movements. However, tests such as Operant or Fear conditioning can include complex rules for what should happen as time passes or if the animal does certain things.

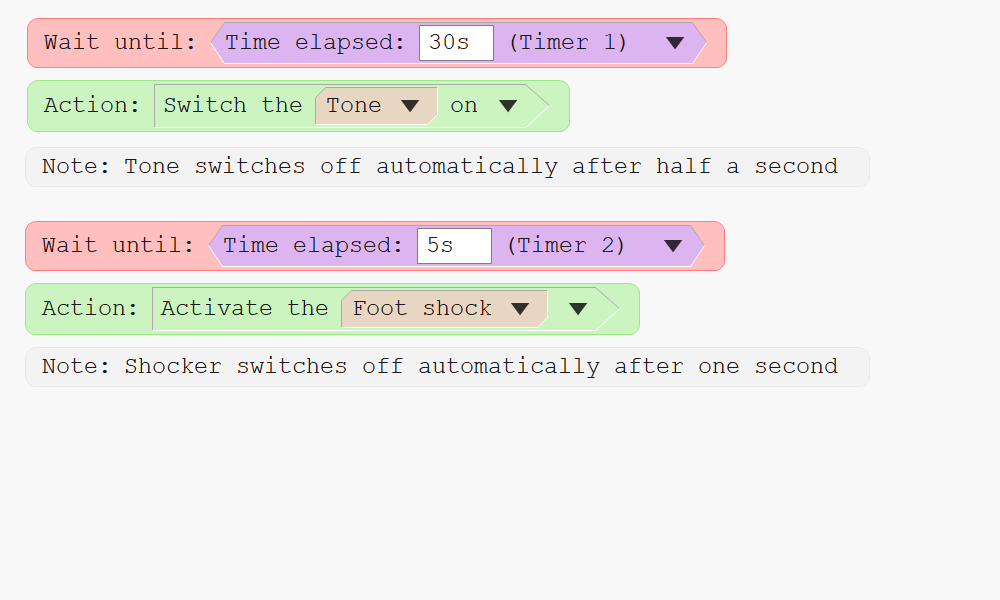

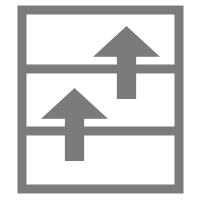

ANY-maze uses procedures to define these rules. Procedures are built by dragging and dropping a few simple-to-understand statements. For example, the procedure on the right specifies that a tone should be played 30 seconds after the test starts, and that a shock should be administered 5 seconds after the tone.
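
For readers who think in code, the timing rule above can be sketched in a few lines of Python. This is not how ANY-maze procedures are written (they are built by drag-and-drop); the function names `build_schedule` and `actions_due` are hypothetical and exist only to illustrate the logic.

```python
# Illustrative sketch only: ANY-maze procedures are built graphically,
# and none of these names come from ANY-maze itself.

def build_schedule(tone_delay_s=30.0, shock_delay_s=5.0):
    """Return (time_in_seconds, action) pairs in the order they fire.

    The tone is timed from the start of the test; the shock is timed
    from the tone, so its absolute time is the sum of both delays.
    """
    tone_time = tone_delay_s
    shock_time = tone_time + shock_delay_s
    return [(tone_time, "play tone"), (shock_time, "administer shock")]


def actions_due(schedule, previous_s, now_s):
    """Return the actions whose time falls in the interval (previous_s, now_s]."""
    return [action for t, action in schedule if previous_s < t <= now_s]


schedule = build_schedule()
for t, action in schedule:
    print(f"{t:g} s: {action}")
```

Calling `actions_due(schedule, 29.0, 30.0)` returns the tone action, because 30 seconds falls inside that interval, while any interval that ends before 30 seconds returns nothing.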

Making decisions

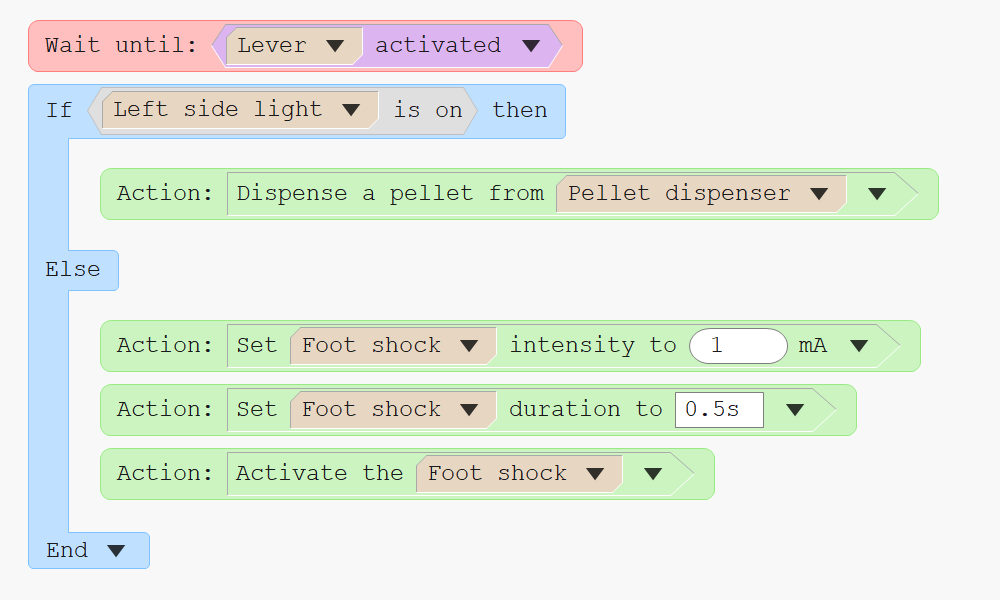

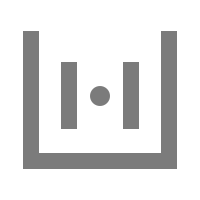

In tests such as Operant conditioning, the animal may be rewarded for making a correct choice. For example, it might be required to press a lever only when a light on the left side of the cage is lit: if it does, it receives a reward; otherwise, a mild foot shock is administered.

It’s easy to set up rules such as this using procedures – see the example on the right.
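
Expressed as code, the lever rule described above comes down to a single decision. The Python sketch below is only an illustration of that logic, not ANY-maze's own procedure syntax; the function name `respond_to_lever_press` and the action strings are assumptions made for the example.

```python
# Illustrative sketch only: the function name and action strings are
# hypothetical and are not part of ANY-maze.

def respond_to_lever_press(left_light_on: bool) -> str:
    """Decide what happens when the animal presses the lever.

    A press while the left light is lit is the correct choice and is
    rewarded; a press at any other time results in a mild foot shock.
    """
    if left_light_on:
        return "deliver reward"
    return "administer mild foot shock"


print(respond_to_lever_press(left_light_on=True))
print(respond_to_lever_press(left_light_on=False))
```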

Use multiple procedures

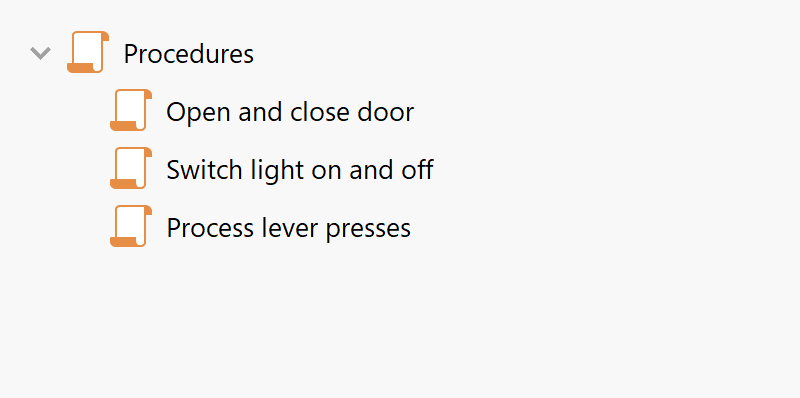

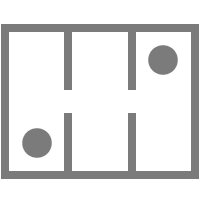

Complex Operant conditioning tests can include many devices: for example, doors separating multiple compartments, with lights, levers and pellet dispensers in each compartment. The rules governing how such tests are performed are usually correspondingly complicated.

Writing a single procedure to automate these tests can be difficult, but breaking the rules into different procedures can make things dramatically simpler. ANY-maze allows you to create any number of procedures, all of which are processed simultaneously.
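
The benefit of splitting rules across several procedures can be sketched in Python. Everything here is hypothetical (the event names, the action names and the `dispatch` helper), and offering each event to every procedure in turn is only an approximation of ANY-maze processing procedures simultaneously.

```python
# Illustrative sketch only: event names, action names and the dispatch
# helper are assumptions, not part of ANY-maze.

def door_procedure(event):
    """One small rule: open the door when the animal enters compartment A."""
    return ["open door"] if event == "entered compartment A" else []


def reward_procedure(event):
    """One small rule: dispense a pellet when the lever is pressed."""
    return ["dispense pellet"] if event == "lever pressed" else []


def light_procedure(event):
    """One small rule: switch the light off when the lever is pressed."""
    return ["switch light off"] if event == "lever pressed" else []


PROCEDURES = [door_procedure, reward_procedure, light_procedure]


def dispatch(event):
    """Offer the event to every procedure and collect the resulting actions."""
    actions = []
    for procedure in PROCEDURES:
        actions.extend(procedure(event))
    return actions


print(dispatch("lever pressed"))
```

Each function handles exactly one concern, so a rule can be added or changed without touching the others, which is the simplification the text describes.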

Procedure features

- Simple drag and drop interface

- Just 8 principal statements

- Procedures can respond to over 50 different events

- Procedures can perform more than 70 different actions

- Full control of all the I/O devices that ANY-maze supports

- Wide range of maths functions available

- Functions to generate random numbers

- Support for variables, including arrays

- Variables can be stored between tests

- Numeric variables can be saved as part of a test's results

- Procedures are checked when you write them and errors are highlighted and explained

- Errors that occur while a test is running are reported at the time and recorded with the test
